In recent months, I’ve immersed myself in the world of LLM Agents, exploring the diverse frameworks that power them and dissecting what truly constitutes an effective agent architecture. Throughout this research journey, one aspect has consistently emerged as the most widely misunderstood component: the action phase, or more specifically, the tool calling capability that enables these agents to interact with their environment.

But first, a quick recap: what is an agent?

Here is an interesting definition of an agent that I came across:

An agent is anything that can be viewed as perceiving its environment through sensors and acting upon that environment through actuators.

— Russell & Norvig, AI: A Modern Approach (2016)

Agents interact with their environment and typically consist of several important components:

- Environments — The world the agent interacts with

- Sensors — Used to observe the environment

- Actuators — Tools used to interact with the environment

- Effectors — The “brain” or rules deciding how to go from observations to actions

In LLM agents, the agent observes its environment through user inputs provided to its “brain” (the LLM) and performs actions in response to that input through tools.

The agent uses the LLM’s planning capability to decide which tool to use. The LLM can “reason” and “think” through techniques such as chain-of-thought (CoT) prompting.

Now let’s talk about tools in an agent. What are these tools, why are they provided, and how are they used in agents?

A Tool is a function that enables a Large Language Model (LLM) to perform an action beyond text generation. These functions fulfill specific objectives that the model couldn’t accomplish on its own.
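Concretely, a tool is usually an ordinary function paired with a description (name, purpose, parameters) that is placed in the LLM’s prompt. The sketch below is hypothetical: `get_word_length` and `describe_tool` are illustrative names, and the JSON-Schema-style layout is an assumption loosely modelled on common tool formats, not any specific framework’s API.

```python
import inspect
import json


def get_word_length(word: str) -> int:
    """Return the number of characters in a word."""
    return len(word)


def describe_tool(func) -> dict:
    """Build a JSON-Schema-style description of a function for an LLM prompt.

    Hypothetical helper: real frameworks generate similar descriptions from
    type hints and docstrings, but their exact formats differ.
    """
    type_names = {str: "string", int: "integer", float: "number", bool: "boolean"}
    params = inspect.signature(func).parameters
    return {
        "name": func.__name__,
        "description": inspect.getdoc(func),
        "parameters": {
            "type": "object",
            "properties": {
                name: {"type": type_names.get(p.annotation, "string")}
                for name, p in params.items()
            },
            # Parameters without a default value are required.
            "required": [
                name for name, p in params.items() if p.default is inspect.Parameter.empty
            ],
        },
    }


# This text is what the model "sees"; it never sees or runs the function body.
print(json.dumps(describe_tool(get_word_length), indent=2))
```

The model only ever reads the description; the function itself stays on the application side.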

A common misconception is that LLMs themselves directly execute tool calls. If you understand the fundamentals, you might wonder: “How could a language model make actual tool calls when it’s essentially just an autoregressive token generator?”

Well, that’s because they don’t call functions directly. Instead, the LLM goes through a “Thought” process in which it reasons about the task and decides which tools or actions to take, then writes that decision out as text.

Agents can be categorized by how they perform actions:

- JSON Agent

- Code Agent

- Function-calling agent

The LLM only handles text and uses it to describe the action it wants to take and the parameters to supply to the tool.
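To make this concrete, here is one intended action (“get the weather in Paris”) written the way each agent type might express it. The tool name `get_weather` and the `action`/`action_input` keys are illustrative assumptions; the function-calling shape follows the OpenAI-style `tool_calls` format, where the arguments are still a JSON string generated by the model. In every case the model only produces text, and something else has to execute it.

```python
import json

# 1. JSON agent: the model writes a JSON blob as plain text.
json_agent_output = '{"action": "get_weather", "action_input": {"location": "Paris"}}'

# 2. Code agent: the model writes a snippet of Python as plain text.
code_agent_output = 'result = get_weather(location="Paris")\nprint(result)'

# 3. Function-calling agent: the provider returns a structured tool call,
#    but "arguments" is still a JSON *string* produced by the model.
function_calling_output = {
    "id": "call_1",
    "type": "function",
    "function": {"name": "get_weather", "arguments": '{"location": "Paris"}'},
}

# The JSON and function-calling forms decode to the same (tool, arguments) pair.
blob = json.loads(json_agent_output)
call = function_calling_output["function"]
print(blob["action"], blob["action_input"])
print(call["name"], json.loads(call["arguments"]))
```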

There are two ways agents execute these actions.

The Stop and Parse Approach

1. Generation in a Structured Format:

The agent outputs its intended action in a clear, predetermined format (JSON or code).

2. Halting Further Generation:

Once the action description is complete, the agent stops generating additional tokens. This prevents extra or erroneous output, such as the model inventing the tool’s result itself.

3. Parsing the Output:

An external parser reads the formatted action, determines which Tool to call, and extracts the required parameters.
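A minimal sketch of these three steps, assuming a hypothetical raw model response and a hypothetical `Observation:` stop sequence (many ReAct-style prompts use one). With a real provider, the stop sequence is usually passed as a request parameter and applied server-side; here the truncation is simulated so the example runs offline, and the tool registry is a hypothetical stub.

```python
import json
import re

# Hypothetical tool registry: tool name -> Python callable.
TOOLS = {
    "get_weather": lambda location: f"Sunny in {location}",
}

STOP_SEQUENCE = "Observation:"

# Hypothetical raw model text. Without a stop sequence the model keeps going
# and hallucinates its own observation, which is what step 2 prevents.
raw_generation = (
    "Thought: I need the weather.\n"
    'Action: {"action": "get_weather", "action_input": {"location": "Paris"}}\n'
    "Observation: It is raining in Paris."
)


def halt(text: str, stop: str) -> str:
    """Step 2: simulate halting generation at the stop sequence."""
    return text.split(stop, 1)[0]


def parse_action(text: str) -> tuple[str, dict]:
    """Step 3: extract the JSON action blob and decode it."""
    match = re.search(r"Action:\s*(\{.*\})", text, re.DOTALL)
    if match is None:
        raise ValueError("No action found in model output")
    blob = json.loads(match.group(1))
    return blob["action"], blob["action_input"]


name, args = parse_action(halt(raw_generation, STOP_SEQUENCE))
observation = TOOLS[name](**args)  # the framework, not the LLM, runs the tool
print(observation)
```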

Code Agents

Instead of outputting a simple JSON object, a Code Agent generates an executable code block — typically in a high-level language like Python.
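A rough sketch of how a framework might run such an output: extract the code from the model’s text and execute it with the tools in scope, capturing what it prints as the observation. The model response, the `<code>...</code>` delimiters, and the `get_weather` stub are assumptions for illustration. Plain `exec` is used only to keep the sketch short; it is not a sandbox, and real frameworks such as smolagents use a restricted interpreter or an isolated execution environment instead.

```python
import contextlib
import io
import re


def get_weather(location: str) -> str:
    """Hypothetical stub tool standing in for a real weather API call."""
    return f"Sunny, 22°C in {location}"


# Hypothetical model response: a thought followed by a Python code action
# wrapped in <code>...</code> tags (an assumed convention for this sketch).
model_output = (
    "Thought: I will call the weather tool and print the result.\n"
    "<code>\n"
    "result = get_weather(location='Paris')\n"
    "print(result)\n"
    "</code>"
)


def extract_code(text: str) -> str:
    """Pull the code action out of the model's text."""
    match = re.search(r"<code>\n?(.*?)</code>", text, re.DOTALL)
    if match is None:
        raise ValueError("No code action found in model output")
    return match.group(1)


def run_code_action(code: str, tools: dict) -> str:
    """Execute the code with the tools in scope and capture what it prints.

    WARNING: exec() is not a sandbox; never run untrusted model output this way.
    """
    buffer = io.StringIO()
    with contextlib.redirect_stdout(buffer):
        exec(code, dict(tools))
    return buffer.getvalue()


observation = run_code_action(extract_code(model_output), {"get_weather": get_weather})
print(observation)
```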

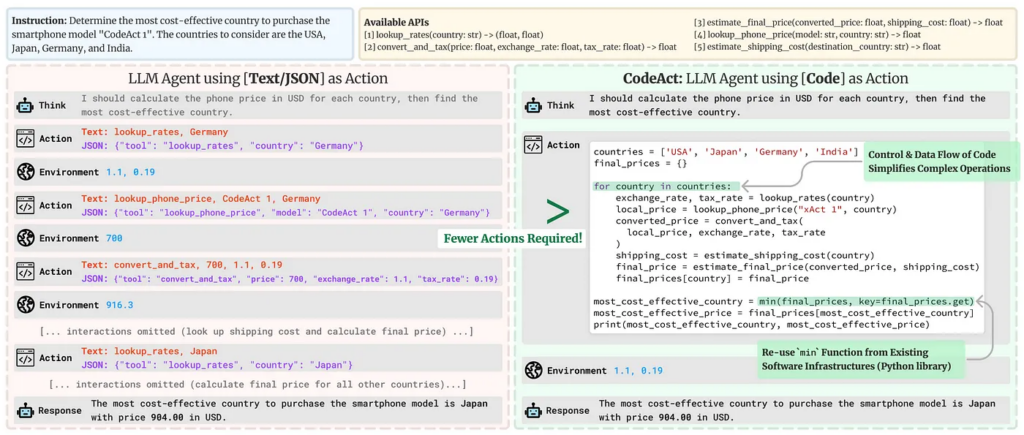

On benchmarks, code agents have been reported to perform considerably better than JSON or function-calling agents. They offer several advantages:

1. Expressiveness:

Code can naturally represent complex logic, including loops, conditionals, and nested functions, providing greater flexibility than JSON.

2. Modularity and Reusability:

Generated code can include functions and modules that are reusable across different actions or tasks.

3. Enhanced Debuggability:

With a well-defined programming syntax, code errors are often easier to detect and correct.

4. Direct Integration:

Code Agents can integrate directly with external libraries and APIs, enabling more complex operations such as data processing or real-time decision making.
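To illustrate the expressiveness point, the sketch below compares the same task (find which of three cities is above 25°C) expressed as JSON actions and as a single code action. A JSON agent typically emits one tool call per blob, so it needs one action, and therefore one LLM turn, per city, while a code action can loop and branch in one turn. The `get_temperature` tool and its data are hypothetical, and the JSON side is simulated rather than driven by a real model.

```python
# Hypothetical tool backed by canned data so the example runs offline.
FAKE_TEMPS = {"Paris": 18, "Tokyo": 27, "Cairo": 34}


def get_temperature(city: str) -> int:
    """Return the (fake) current temperature of a city in °C."""
    return FAKE_TEMPS[city]


# JSON agent: each blob holds one tool call, so checking three cities
# takes three separate actions.
json_actions = [
    {"action": "get_temperature", "action_input": {"city": "Paris"}},
    {"action": "get_temperature", "action_input": {"city": "Tokyo"}},
    {"action": "get_temperature", "action_input": {"city": "Cairo"}},
]
json_observations = [get_temperature(**a["action_input"]) for a in json_actions]

# Code agent: one action loops over all cities and filters in the same step.
code_action = (
    'hot = [c for c in ["Paris", "Tokyo", "Cairo"] if get_temperature(c) > 25]\n'
    "print(hot)\n"
)
namespace = {"get_temperature": get_temperature}
exec(code_action, namespace)  # illustration only; exec() is not a sandbox

print("JSON actions needed:", len(json_actions))
print("Code actions needed:", 1)
```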

Of the two methods, code agents tend to perform significantly better than the stop-and-parse approach.

However, many products opt against implementing code agents because of their complexity and resource demands. Developing code agents requires specialized programming skills and significant development time, making them less feasible for teams without extensive technical expertise. Additionally, code agents often need ongoing maintenance and updates, which can be resource-intensive.

That said, code agents are advantageous when a product requires high customisation, flexibility, and control over AI functionality. They are well-suited to complex tasks that demand tailored solutions and seamless integration with existing systems. Organisations with dedicated development teams and specific, sophisticated requirements may find code agents the most effective solution despite their higher initial investment.

For now, the best approach when developing a system is to start with a JSON-blob-based tool-calling agent and move to a code agent only if growing complexity makes it necessary.

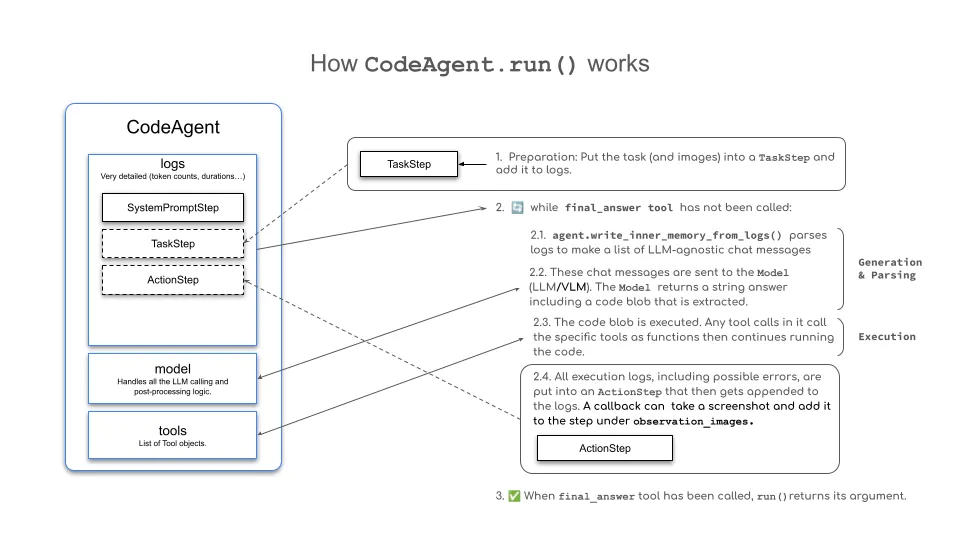

So how does a Code Agent work?

A CodeAgent performs actions in a cycle of steps: existing variables and knowledge are incorporated into the agent’s context, which is kept in the execution logs.
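A minimal sketch of that cycle, assuming a scripted stand-in for the LLM (`scripted_model`, hypothetical) so it runs offline. Each step’s code runs in one shared namespace, so variables defined in earlier steps stay available, and every step’s code and output is appended to a log that would be fed back to the model as context. The `final_answer` tool echoes the idea used in smolagents, but this loop is an illustration, not smolagents’ internal implementation.

```python
import contextlib
import io

# Scripted stand-in for the LLM: one code action per step (hypothetical).
SCRIPTED_STEPS = [
    "prices = [3.5, 4.25, 2.0]",
    "total = sum(prices)\nprint(total)",
    "final_answer(f'Total cost: {total}')",
]


class FinalAnswer(Exception):
    """Raised by the final_answer tool to end the loop."""


def final_answer(answer):
    raise FinalAnswer(answer)


def scripted_model(log: list[str], step: int) -> str:
    # A real model would read `log` (its context) to choose the next action.
    return SCRIPTED_STEPS[step]


def run_agent(max_steps: int = 5):
    namespace = {"final_answer": final_answer}  # persists across steps
    log = []  # execution log, i.e. the agent's memory/context
    for step in range(max_steps):
        code = scripted_model(log, step)
        buffer = io.StringIO()
        try:
            with contextlib.redirect_stdout(buffer):
                exec(code, namespace)  # illustration only; not sandboxed
        except FinalAnswer as done:
            log.append(f"Step {step}: {code!r} -> final answer")
            return done.args[0], log
        log.append(f"Step {step}: {code!r} -> {buffer.getvalue().strip()!r}")
    return None, log


answer, log = run_agent()
print(answer)
for entry in log:
    print(entry)
```

Note that step 3 uses `total`, which was created in step 2: this shared state is what lets a code agent build on its own earlier work.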

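Below is a Code Agent, built with smolagents, that can check the weather, fetch the top news headlines, and look up the current time in a given timezone: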

# ! pip install smolagents

import os

os.environ["OPENAI_API_KEY"] = ""

from typing import Optional

import requests

# from smolagents.agents import ToolCallingAgent

from smolagents import CodeAgent, HfApiModel, tool, LiteLLMModel

# Choose which LLM engine to use!

model = LiteLLMModel(model_id="gpt-4o", )

@tool

def get_weather(location: str, celsius: Optional[bool] = False) -> str:

"""

Get the current weather at the given location using the WeatherStack API.

Args:

location: The location (city name).

celsius: Whether to return the temperature in Celsius (default is False, which returns Fahrenheit).

Returns:

A string describing the current weather at the location.

"""

api_key = "" # Replace with your API key from https://weatherstack.com/

units = "m" if celsius else "f" # 'm' for Celsius, 'f' for Fahrenheit

url = f"http://api.weatherstack.com/current?access_key={api_key}&query={location}&units={units}"

try:

response = requests.get(url)

response.raise_for_status() # Raise an exception for HTTP errors

data = response.json()

if data.get("error"): # Check if there's an error in the response

return f"Error: {data['error'].get('info', 'Unable to fetch weather data.')}"

weather = data["current"]["weather_descriptions"][0]

temp = data["current"]["temperature"]

temp_unit = "°C" if celsius else "°F"

return f"The current weather in {location} is {weather} with a temperature of {temp} {temp_unit}."

except requests.exceptions.RequestException as e:

return f"Error fetching weather data: {str(e)}"

@tool

def get_news_headlines() -> str:

"""

Fetches the top news headlines from the News API for the United States.

This function makes a GET request to the News API to retrieve the top news headlines

for the United States. It returns the titles and sources of the top 5 articles as a

formatted string. If no articles are available, it returns a message indicating that

no news is available. In case of a request error, it returns an error message.

Returns:

str: A string containing the top 5 news headlines and their sources, or an error message.

"""

api_key = "" # Replace with your actual API key from https://newsapi.org/

url = f"https://newsapi.org/v2/top-headlines?country=us&apiKey={api_key}"

try:

response = requests.get(url)

response.raise_for_status()

data = response.json()

articles = data["articles"]

if not articles:

return "No news available at the moment."

headlines = [f"{article['title']} - {article['source']['name']}" for article in articles[:5]]

return "\n".join(headlines)

except requests.exceptions.RequestException as e:

return f"Error fetching news data: {str(e)}"

@tool

def get_time_in_timezone(location: str) -> str:

"""

Fetches the current time for a given location using the World Time API.

Args:

location: The location for which to fetch the current time, formatted as 'Region/City'.

Returns:

str: A string indicating the current time in the specified location, or an error message if the request fails.

Raises:

requests.exceptions.RequestException: If there is an issue with the HTTP request.

"""

url = f"http://worldtimeapi.org/api/timezone/{location}.json"

try:

response = requests.get(url)

response.raise_for_status()

data = response.json()

current_time = data["datetime"]

return f"The current time in {location} is {current_time}."

except requests.exceptions.RequestException as e:

return f"Error fetching time data: {str(e)}"

agent = CodeAgent(

tools=[

get_weather,

get_news_headlines,

get_time_in_timezone,

],

model=model,

)

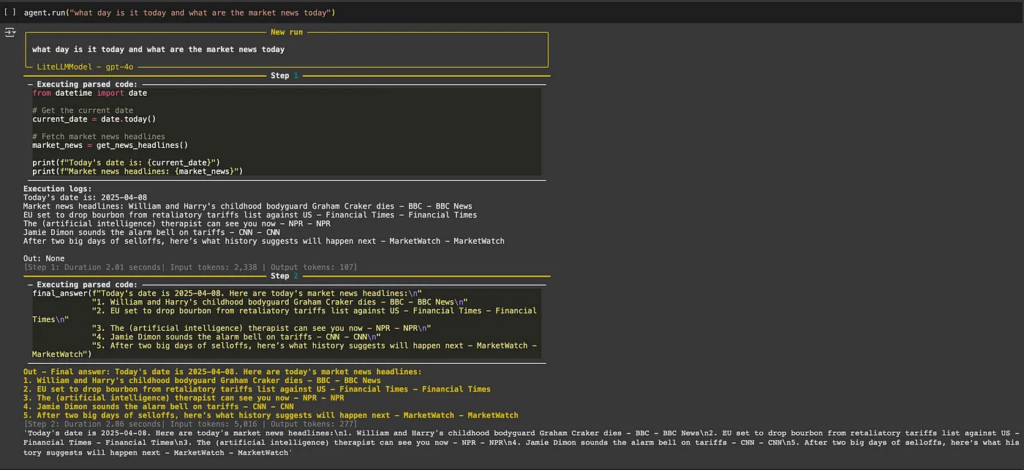

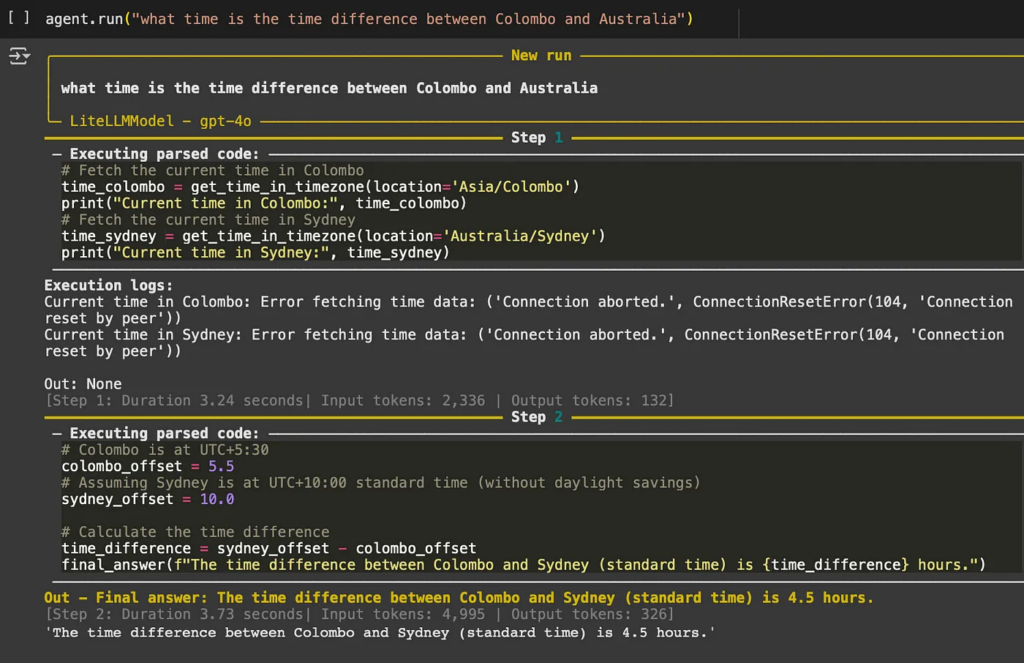

agent.run("what day is it today and what are the market news today")

As you can see from the code above and the CodeAgent execution logs, code agents can perform the same tasks as JSON-blob agents, but with better efficiency and accuracy. Because they perform calculations by executing code, they are also more reliable at arithmetic and handle fallbacks better than JSON-blob agents.

The process by which a Code Agent performs a task is described below.

Once an agent executes an action, it enters the observation phase, in which it:

- Collects Feedback: Receives data or confirmation that its action was successful (or not).

- Appends Results: Integrates the new information into its existing context, effectively updating its memory.

- Adapts its Strategy: Uses this updated context to refine subsequent thoughts and actions.

This iterative incorporation of feedback keeps the agent dynamically aligned with its goals, constantly adjusting its next steps based on real-world outcomes.
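A minimal sketch of the observation phase, focusing on failure: the result of each action, including an error message, is appended to memory, and the next action is chosen with that memory in view. `fake_llm` is a hypothetical rule-based stand-in for the model and `divide` is a hypothetical tool; a real agent would send the memory back to the LLM as context.

```python
def divide(a: float, b: float) -> float:
    """Hypothetical tool."""
    return a / b


def fake_llm(memory: list[dict]) -> dict:
    """Hypothetical stand-in for the LLM: picks the next action from memory."""
    if not memory:
        return {"tool": "divide", "args": {"a": 10, "b": 0}}
    last = memory[-1]
    if last["observation"].startswith("Error"):
        # Adapt the strategy: the previous attempt failed, so change the input.
        return {"tool": "divide", "args": {"a": 10, "b": 2}}
    return {"tool": None, "answer": last["observation"]}


TOOLS = {"divide": divide}
MAX_STEPS = 5
memory: list[dict] = []
answer = None

for _ in range(MAX_STEPS):
    action = fake_llm(memory)
    if action["tool"] is None:
        answer = action["answer"]
        break
    try:
        # Collect feedback: the tool's result...
        observation = str(TOOLS[action["tool"]](**action["args"]))
    except Exception as exc:
        # ...or the error it raised, turned into text the model can read.
        observation = f"Error: {type(exc).__name__}: {exc}"
    # Append results: the observation becomes part of the agent's context.
    memory.append({"action": action, "observation": observation})

print(answer)
for entry in memory:
    print(entry["observation"])
```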

So, should you consider CodeAgents for your next project?

Personally, I think you should consider CodeAgents, but always keep in mind the added complexity of building one. While CodeAgents offer notable advantages, weigh these complexities carefully to ensure they don’t outweigh the performance benefits.

Written by visith kumarapperuma

AI Engineer @ Convogrid.ai. Exploring the creative edge of machine learning.
